Compressing Random Forest Models with over 90% Reduction in Size

With the latest advancements in the field of AI, new techniques and algorithms like transformers, GANs, etc. have emerged stealing the limelight from traditional techniques(knock knock GenZ incoming ¬‿¬). While there have been significant advancements with these algorithms in specific domains that provide state-of-the-art performance, let’s not overlook the enduring power and reliability of traditional algorithms. They may seem rusty, but their proven track record and versatility continue to make them invaluable in various domains.

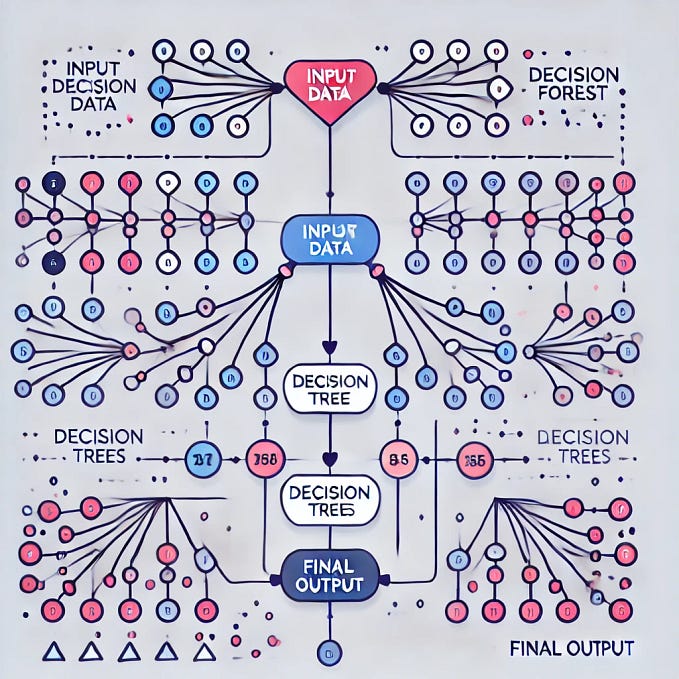

Random Forest Models, which may seem like your grandfather’s futile old stick(ツ) is still a popular technique in the realm of ensemble learning that offers a compelling edge over typical artificial neural networks (ANNs) when it comes to handling numerical data. Despite their simplicity and intuitive nature, random forests are beneficial in several areas. Their robustness to overfitting ensures better predictions, especially in noisy or complex datasets. Additionally, their ability to capture non-linear relationships within the data, makes them versatile and effective in a wide range of real-world applications.

However, these models can sometimes be costly in terms of storage. As the dimensionality of the input features grows, the size of the model can significantly increase, occasionally reaching gigabytes just to model a couple of thousand entries of the provided input features and sadly there are no intuitive strategies to compress or quantize these models ;-;

Therefore to get relief from such a stressful situation I’ve formulated a novel solution to compress such models, slashing their size by over 90% while still preserving the original model’s accuracy. The approach is pretty intuitive and straightforward and works with all kinds of Random Forest models(regression, classification, multi input/output, etc.)

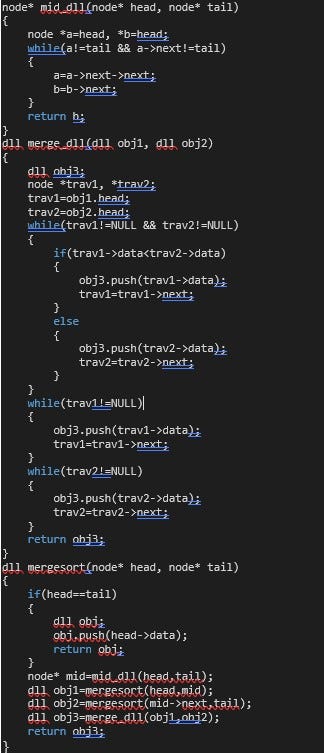

As Random Forest models are binary trees in nature and can be stored as a dictionary in python if you’re brave enough(•̀ᴗ•́ ), so we start off by extracting the tree’s content(features, nodes, thresholds, values). We can then reduce the decimal precision of the tree's thresholds and leaf node values to trim the non-required trailing decimal digits.

from sklearn.tree import _tree

import numpy as np

import json

import gzip

from joblib import load

def extract_tree_structure(decision_tree, feature_names, precision=4):

"""

Extracts and returns the structure of a decision tree in a recursive dictionary format.

This function traverses a decision tree and extracts information about each node, including

the feature used for splitting (if any), the threshold for splitting, and the values at the leaf nodes.

The structure is returned as a nested dictionary, where decision nodes contain information about

the feature name ('n'), threshold ('t'), and recursive structures for the left ('l') and right ('r') branches.

Leaf nodes contain the values ('v'). All numeric values are rounded to the specified precision.

Parameters:

- decision_tree (DecisionTreeClassifier or DecisionTreeRegressor): A trained decision tree.

- feature_names (list of str): A list containing the names of the features used in the decision tree.

- precision (int, optional): The number of decimal places to round numeric values to. Default is 4.

Returns:

- dict: A nested dictionary representing the structure of the decision tree.

"""

tree_ = decision_tree.tree_

feature_name = [

feature_names[i] if i != _tree.TREE_UNDEFINED else "undefined!"

for i in tree_.feature

]

thresholds = np.around(tree_.threshold, decimals=precision)

values = np.around(tree_.value, decimals=precision)

def recurse(node, depth):

if tree_.feature[node] != _tree.TREE_UNDEFINED:

name = feature_name[node]

threshold = thresholds[node]

left = recurse(tree_.children_left[node], depth + 1)

right = recurse(tree_.children_right[node], depth + 1)

return {'n': name, 't': np.around(threshold, decimals=precision), 'l': left, 'r': right}

else:

temp = np.around(values[node], decimals=precision)

return {'v': temp.tolist()}

return recurse(0, 1)model_path = "path/to/random/forest/model.joblib"

model = load(model_path)

feature_names = [i for i in range(model.n_features_in_)]

model_dict = [extract_tree_structure(estimator, feature_names, precision=precision) for estimator in model.estimators_]The resulting dictionary can then be saved in JSON format and this can be further zipped with best-in-class algorithms.

with open("some/path.json", 'w') as f:

json.dump(model_dict, f)

with open("some/path.json", 'r') as f:

json_data = f.read()

with gzip.open("some/path.json.gz", 'wt', encoding='UTF-8') as zipfile:

zipfile.write(json_data)For obtaining the predictions from the JSON a.k.a the Random Forest Model, we can utilize a simple recursive tree traversal logic ฅ/ᐠ. ̫ .ᐟ\ฅ

╾━╤デ╦︻

My most civil threat for you is to head over to the GitHub Repo and enjoy the entire code and hands-on comparison of the outputs from the original and JSON model.

https://github.com/K-prog/Compressing-Random-Forest-Models

Curious to know how it works…

Thanks for joining in on this journey of pounding Random Forests (^o^)ノ